Data Structure & Algorithms

Intro to Data Structure & Algorithms

Data Structures are organized ways to store and arrange data so operations (insert, search, delete, traverse) can be performed efficiently. Algorithms are finite, well-defined step-by-step procedures to solve a problem or transform input to output. Together they form the core toolkit for writing software that is not only correct but scalable and maintainable.

Why they matter:

- Performance: Choosing the right structure can turn an O(n²) solution into O(n log n) or better.

- Resource efficiency: Good space management lowers memory pressure and costs.

- Reliability & clarity: Proper abstractions reduce bugs and cognitive load.

- Scalability: Enables systems to handle growth in users, data volume, or complexity.

Common data structure families:

- Linear: Arrays, Dynamic Arrays, Linked Lists

- Hierarchical: Stacks, Queues, Heaps, Priority Queues

- Associative / Lookup: Hash Tables, Maps, Sets

- Recursive / Hierarchical: Trees (BST, AVL, Red-Black, Trie, Segment, Fenwick)

- Graphs: Directed, Undirected, Weighted

- Specialized: Disjoint Set (Union-Find), Bloom Filter, LRU Cache patterns

Algorithm paradigms:

- Brute Force (baseline)

- Divide & Conquer (Merge Sort, Quick Sort)

- Greedy (Interval Scheduling, Huffman Coding)

- Dynamic Programming (Knapsack, LIS)

- Backtracking (N-Queens, Sudoku)

- Graph Strategies (BFS, DFS, Dijkstra, Topological Sort)

- Probabilistic / Approximation (when exact is expensive)

Measuring efficiency:

- Time complexity: Big-O (upper bound), Θ (tight), Ω (lower bound)

- Space complexity: Auxiliary memory beyond input

- Trade-offs: Faster lookup vs slower updates, memory overhead vs speed

Core goals of a good solution:

- Correctness

- Clarity

- Optimal or acceptable complexity

- Predictable behavior under scale

- Maintainability and adaptability

Real-world mappings:

- Autocomplete: Tries

- Social networks: Graph traversals

- Feed ranking: Heaps / priority queues

- Caches & sessions: Hash maps + eviction policies

- Conflict detection / geometry: Segment Trees / Interval Trees

- Compilers / interpreters: Abstract syntax trees

Mindset:

- Define the problem precisely (inputs, outputs, constraints).

- Identify dominant operations (reads, writes, lookups, aggregations).

- Estimate growth (n, m, depth, frequency).

- Choose structure → validate with complexity → prototype → measure.

A Priori Analysis vs A Posteriori Testing

Understanding performance has two complementary angles:

A priori (theoretical) analysis:

- Done before (or without) running code.

- Abstract machine model: count primitive operations (comparisons, assignments, hash lookups).

- Express cost as a function of input size n (and other parameters: m edges, k keys, h height).

- Derive worst, average, best cases; classify with Big-O / Θ / Ω.

- Ignores constant factors, cache effects, language/runtime overhead.

- Useful for design decisions, comparing alternative data structures, spotting impossible scalability.

A posteriori (empirical) testing:

- Run real (or synthetic) inputs and measure.

- Metrics: wall time, CPU time, allocations, memory peak, cache misses, branch mispredicts (if profiled), throughput, latency distribution (p50/p95/p99).

- Tools: benchmark harnesses, profilers, tracing, instrumentation counters.

- Reveals constant factors, hidden costs (boxing, GC, I/O), micro-architectural impacts.

- Validates (or falsifies) theoretical assumptions with real data distributions.

Why both matter:

- Theory filters out fundamentally non-scalable designs early.

- Measurement prevents overconfidence in asymptotics (e.g., O(n) with huge constant vs tuned O(n log n)).

- Discrepancies highlight modeling gaps (e.g., hash collisions, poor branching, memory locality).

Recursion

Definition and Core Concept

Recursion is a programming technique where a function calls itself to solve a smaller instance of the same problem. It's based on the mathematical principle of mathematical induction. Key Components:

Recursive Case: The function calls itself with a modified parameter Base Case: A condition that stops the recursion (prevents infinite calls)

How Recursion Works

The Call Stack

When a function calls itself:

- Each function call is pushed onto the call stack

- Parameters and local variables are stored for each call

- When a base case is reached, functions start returning

- The stack "unwinds" as each function completes

Memory Model

factorial(4)

└── factorial(3)

└── factorial(2)

└── factorial(1) ← Base case reached

└── returns 1

← returns 2 * 1 = 2

← returns 3 * 2 = 6

← returns 4 * 6 = 24Anatomy of a Recursive Function

def recursive_function(parameters):

# Base case(s) - ALWAYS check first

if base_condition:

return base_value

# Recursive case - problem reduction

# Must move toward base case

return some_operation(recursive_function(modified_parameters))Essential Elements:

- Progress toward base case: Each recursive call must bring you closer to the base case

- Problem decomposition: Break the problem into smaller, similar subproblems

- Combine results: Use the result of smaller problems to solve the larger one

Classic Examples

Example 1: Factorial

def factorial(n):

# Base case

if n <= 1:

return 1

# Recursive case

return n * factorial(n - 1)

# factorial(5) = 5 * 4 * 3 * 2 * 1 = 120Example 2: Fibonacci Sequence

def fibonacci(n):

# Base cases

if n <= 1:

return n

# Recursive case

return fibonacci(n - 1) + fibonacci(n - 2)

# fibonacci(6) = 8

# Sequence: 0, 1, 1, 2, 3, 5, 8, 13...Example 3: Binary Tree Traversal

class TreeNode:

def __init__(self, val=0):

self.val = val

self.left = None

self.right = None

def inorder_traversal(root):

# Base case

if not root:

return []

# Recursive case

left_vals = inorder_traversal(root.left)

right_vals = inorder_traversal(root.right)

return left_vals + [root.val] + right_valsTypes of Recursion

Linear Recursion: Function calls itself once per execution Examples: factorial, linear search Call stack grows linearly with input size

Binary Recursion: Function calls itself twice per execution Examples: Fibonacci, binary tree traversal Can lead to exponential time complexity

Tail Recursion: Recursive call is the last operation in the function Can be optimized by compilers to avoid stack growth Example:

def tail_factorial(n, accumulator=1):

if n <= 1:

return accumulator

return tail_factorial(n - 1, n * accumulator)Mutual Recursion: Two or more functions call each other

Example:

def is_even(n):

if n == 0:

return True

return is_odd(n - 1)

def is_odd(n):

if n == 0:

return False

return is_even(n - 1)Recursion vs Iteration

When to Use Recursion:

- Tree/Graph structures: Natural recursive structure

- Divide and conquer: Problems that break into similar subproblems

- Mathematical definitions: When the problem is naturally defined recursively

- Backtracking: Exploring multiple solution paths

When to Use Iteration:

- Simple counting/accumulation: Loops are more efficient

- Stack space concerns: Large inputs might cause stack overflow

- Performance critical: Iteration often has less overhead

Comparison Table:

| Aspect | Recursion | Iteration |

|---|---|---|

| Memory | Uses call stack (O(n) space) | Uses constant space |

| Speed | Slower (function call overhead) | Faster |

| Code clarity | Often more readable | Can be more complex for some problems |

| Stack overflow | Possible with deep recursion | Not an issue |

Common Patterns and Applications

Divide and Conquer

def merge_sort(arr):

if len(arr) <= 1:

return arr

mid = len(arr) // 2

left = merge_sort(arr[:mid])

right = merge_sort(arr[mid:])

return merge(left, right)Backtracking

def solve_n_queens(n):

def backtrack(row, board):

if row == n:

solutions.append([''.join(row) for row in board])

return

for col in range(n):

if is_safe(board, row, col):

board[row][col] = 'Q'

backtrack(row + 1, board)

board[row][col] = '.' # backtrack

solutions = []

board = [['.' for _ in range(n)] for _ in range(n)]

backtrack(0, board)

return solutionsDynamic Programming (with Memoization)

def fibonacci_memo(n, memo={}):

if n in memo:

return memo[n]

if n <= 1:

return n

memo[n] = fibonacci_memo(n-1, memo) + fibonacci_memo(n-2, memo)

return memo[n]Common Pitfalls and Debugging

Pitfall 1: Missing or Incorrect Base Case

# WRONG - no base case leads to infinite recursion

def bad_factorial(n):

return n * bad_factorial(n - 1) # Stack overflow!

# WRONG - base case never reached

def bad_countdown(n):

if n == 0:

return

print(n)

bad_countdown(n + 1) # n keeps increasing!Pitfall 2: Not Making Progress

# WRONG - parameter doesn't change

def stuck_function(n):

if n == 0:

return 0

return 1 + stuck_function(n) # n never decreases!Pitfall 3: Stack Overflow

Occurs when recursion depth exceeds system limits Python default: ~1000 recursive calls Solutions: Use iteration, tail recursion, or increase recursion limit

Debugging Techniques:

- Trace execution: Print parameters at each call

- Verify base cases: Ensure they're reachable and correct

- Check progress: Confirm parameters move toward base case

- Use small inputs: Test with minimal cases first

Performance Analysis

Time Complexity Examples:

- Linear recursion (factorial):

- Binary recursion (naive Fibonacci):

- Divide and conquer (merge sort):

Space Complexity:

- Call stack space:

- Tail recursion: if optimized

- With memoization: additional space

Advanced Concepts

Memoization Pattern

from functools import lru_cache

@lru_cache(maxsize=None)

def expensive_recursive_function(n):

if n <= 1:

return n

return expensive_recursive_function(n-1) + expensive_recursive_function(n-2)Converting Recursion to Iteration

# Recursive

def recursive_sum(arr, index=0):

if index >= len(arr):

return 0

return arr[index] + recursive_sum(arr, index + 1)Iterative equivalent

def iterative_sum(arr):

total = 0

for num in arr:

total += num

return totalParameterized Recursion Using additional parameters to avoid recomputation:

def power_optimized(base, exp, result=1):

if exp == 0:

return result

if exp % 2 == 0:

return power_optimized(base * base, exp // 2, result)

else:

return power_optimized(base, exp - 1, result * base)Print all subset of a array

def print_subsets(arr):

def backtrack(index, current_subset):

if index == len(arr):

print(current_subset)

return

# Include current element

current_subset.append(arr[index])

backtrack(index + 1, current_subset)

current_subset.pop() # backtrack

# Exclude current element

backtrack(index + 1, current_subset)

backtrack(0, [])

arr = [1, 2, 3]

print_subsets(arr)Print subsequences whose sum is k

Problem: Given an array and target sum k, find all subsequences that sum to k.

Approach: Use recursion with backtracking. For each element, we have two choices:

- Include the element in current subsequence

- Exclude the element from current subsequence

def print_subsequences_with_sum_k(arr, k):

def backtrack(index, current_subsequence, current_sum):

# Base case: processed all elements

if index == len(arr):

if current_sum == k:

print(current_subsequence)

return

# Choice 1: Include current element

current_subsequence.append(arr[index])

backtrack(index + 1, current_subsequence, current_sum + arr[index])

current_subsequence.pop() # backtrack

# Choice 2: Exclude current element

backtrack(index + 1, current_subsequence, current_sum)

backtrack(0, [], 0)

# Example usage

arr = [1, 2, 1]

k = 2

print_subsequences_with_sum_k(arr, k)

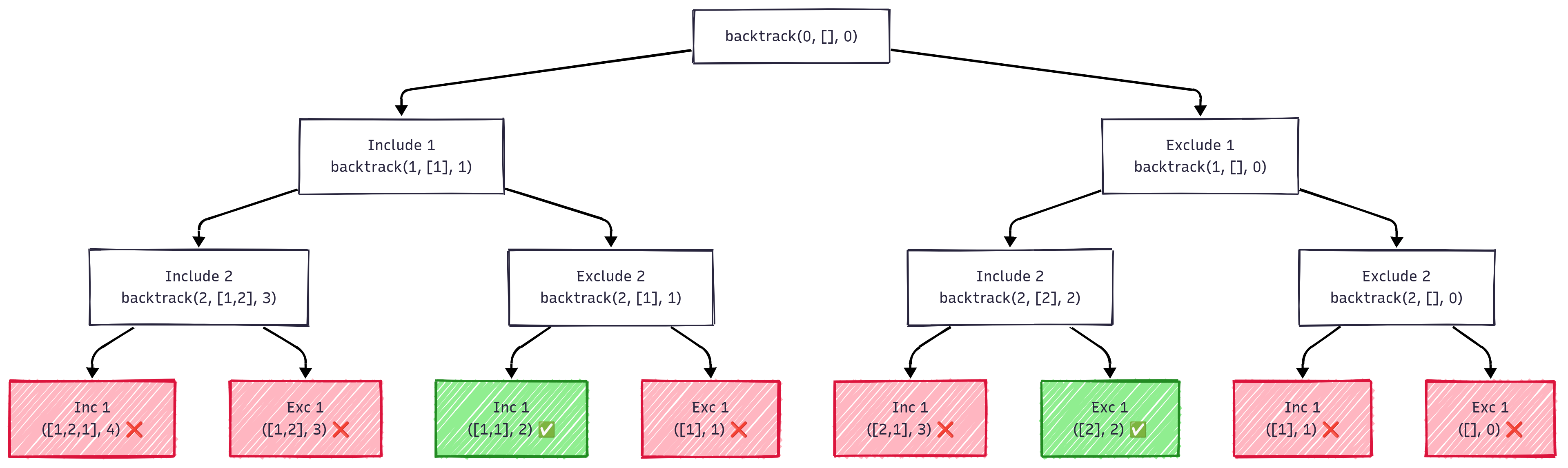

# Output: [2], [1, 1]Recursion Tree for array [1, 2, 1] with k=2:

Key Points:

- Time Complexity: - each element has 2 choices

- Space Complexity: - recursion depth and subsequence storage

- Pattern: Classic "include/exclude" decision tree

- Optimization: Can add pruning if (for positive numbers)

Optimized version with pruning:

def print_subsequences_with_sum_k_optimized(arr, k):

def backtrack(index, current_subsequence, current_sum):

# Pruning: if sum exceeds k, no point continuing (for positive arrays)

if current_sum > k:

return

if index == len(arr):

if current_sum == k:

print(current_subsequence[:]) # print copy

return

# Include current element

current_subsequence.append(arr[index])

backtrack(index + 1, current_subsequence, current_sum + arr[index])

current_subsequence.pop()

# Exclude current element

backtrack(index + 1, current_subsequence, current_sum)Count the Subsequences with Sum k

def count_subsequences_with_sum_k(arr, k):

def backtrack(index, current_sum):

if index == len(arr):

return 1 if current_sum == k else 0

# Include current element

count_including = backtrack(index + 1, current_sum + arr[index])

# Exclude current element

count_excluding = backtrack(index + 1, current_sum)

return count_including + count_excluding

return backtrack(0, 0)

# Example usage

arr = [1, 2, 1]

k = 2

print(count_subsequences_with_sum_k(arr, k)) # Output: 2Combination of Subsequences with Sum k

To find all combinations of subsequences that sum to k, we can modify our approach to collect valid subsequences instead of just counting them.

def find_combinations_with_sum_k(arr, k):

def backtrack(index, current_subsequence, current_sum):

if current_sum == k:

print(current_subsequence)

return

if index == len(arr) or current_sum > k:

return

# Include current element

current_subsequence.append(arr[index])

backtrack(index + 1, current_subsequence, current_sum + arr[index])

current_subsequence.pop()

# Exclude current element

backtrack(index + 1, current_subsequence, current_sum)

backtrack(0, [], 0)

# Example usage

arr = [1, 2, 1]

k = 2

find_combinations_with_sum_k(arr, k)Print all Permutations of a String/Array

def print_permutations(s):

def backtrack(path, remaining):

if not remaining or len(path) == len(s):

print(''.join(path))

return

for i in range(len(remaining)):

path.append(remaining[i]) # Choose

backtrack(path, remaining[:i] + remaining[i+1:]) # Explore

path.pop() # Un-choose

backtrack([], list(s))Optimized solution with swapping to avoid extra space:

def print_permutations_optimized(s):

def backtrack(start):

if start == len(s) - 1:

print(''.join(s))

return

for i in range(start, len(s)):

s[start], s[i] = s[i], s[start] # swap

backtrack(start + 1)

s[start], s[i] = s[i], s[start] # backtrack (swap back)

s = list(s)

backtrack(0)

print_permutations_optimized("ABC")Best Practices

Do:

- ✅ Always define clear base cases first

- ✅ Ensure each recursive call progresses toward the base case

- ✅ Use memoization for overlapping subproblems

- ✅ Consider iterative solutions for simple cases

- ✅ Test with small inputs first

Don't:

- ❌ Forget the base case

- ❌ Create infinite recursion

- ❌ Use recursion for simple iteration tasks

- ❌ Ignore stack overflow possibilities

- ❌ Modify global state without careful consideration

Binary Search

Binary search is a highly efficient algorithm for finding an item from a sorted list of items. It works by repeatedly dividing the search interval in half. If the value of the search key is less than the item in the middle of the interval, the search continues in the lower half, or if it's greater, in the upper half. This process repeats until the value is found or the interval is empty.

def binary_search(arr, target):

left, right = 0, len(arr) - 1

while left <= right:

mid = left + (right - left) // 2

if arr[mid] == target:

return mid # Target found

elif arr[mid] < target:

left = mid + 1 # Search in the right half

else:

right = mid - 1 # Search in the left half

return -1 # Target not foundBinary Search Recursively:

def binary_search_recursive(arr, target, left, right):

if left > right:

return -1 # Target not found

mid = left + (right - left) // 2

if arr[mid] == target:

return mid # Target found

elif arr[mid] < target:

return binary_search_recursive(arr, target, mid + 1, right) # Search in the right half

else:

return binary_search_recursive(arr, target, left, mid - 1) # Search in the left halfTime Complexity: O(log n) - Each step reduces the search space by half.

Tree Data Structure

A tree is a hierarchical data structure consisting of nodes, where each node contains a value and references to its child nodes. The topmost node is called the root, and nodes without children are called leaves. Trees are widely used in computer science for various applications, including representing hierarchical data, facilitating fast search operations, and organizing data for efficient access.

Types of Trees

- Binary Tree: Each node has at most two children (left and right).

- Binary Search Tree (BST): A binary tree where the left child's value is less than the parent's value, and the right child's value is greater.

- Full Binary Tree: Every node has either 0 or 2 children.

- Complete Binary Tree: All levels are fully filled except possibly the last, which is filled from left to right.

- Balanced Trees: Trees that maintain a balanced height to ensure efficient operations (e.g., AVL Tree, Red-Black Tree).

- Trie: A tree used for storing a dynamic set of strings, where each node represents a character.

- Heap: A complete binary tree that satisfies the heap property (max-heap or min-heap).

- Segment Tree: A tree used for storing intervals or segments, allowing efficient range queries and updates.

A Tree is typically traversed in two ways:

- Breadth First Traversal (Or Level Order Traversal)

- Depth First Traversals

- Inorder Traversal (Left-Root-Right)

- Preorder Traversal (Root-Left-Right)

- Postorder Traversal (Left-Right-Root)

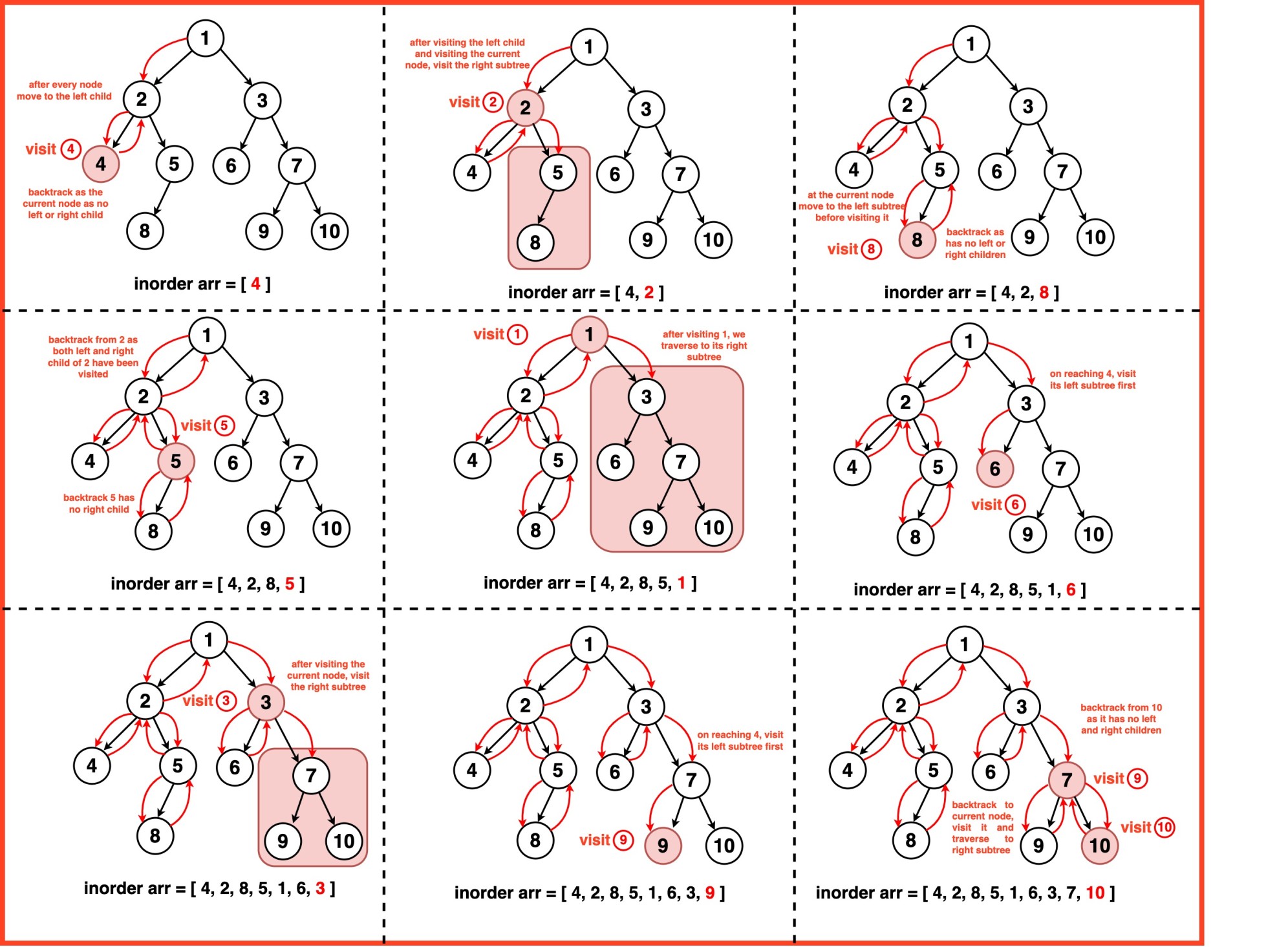

Inorder Traversal of Binary Tree

Inorder traversal visits nodes in the following order: left subtree, root node, right subtree. This traversal method is particularly useful for binary search trees (BSTs) because it retrieves values in sorted order.

class Node:

def __init__(self, key):

self.left = None

self.right = None

self.val = key

def inorder_traversal(root):

if root is None:

return

inorder_traversal(root.left)

print(root.val, end=' ')

inorder_traversal(root.right)

def inorder_traversal_iterative(root):

stack = []

current = root

while stack or current:

while current:

stack.append(current)

current = current.left

current = stack.pop()

print(current.val, end=' ')

current = current.right

# Example usage:

if __name__ == "__main__":

root = Node(1)

root.left = Node(2)

root.right = Node(3)

root.left.left = Node(4)

root.left.right = Node(5)

print("Inorder traversal of binary tree is:")

inorder_traversal(root) # Output: 4 2 5 1 3Postorder Traversal of Binary Tree

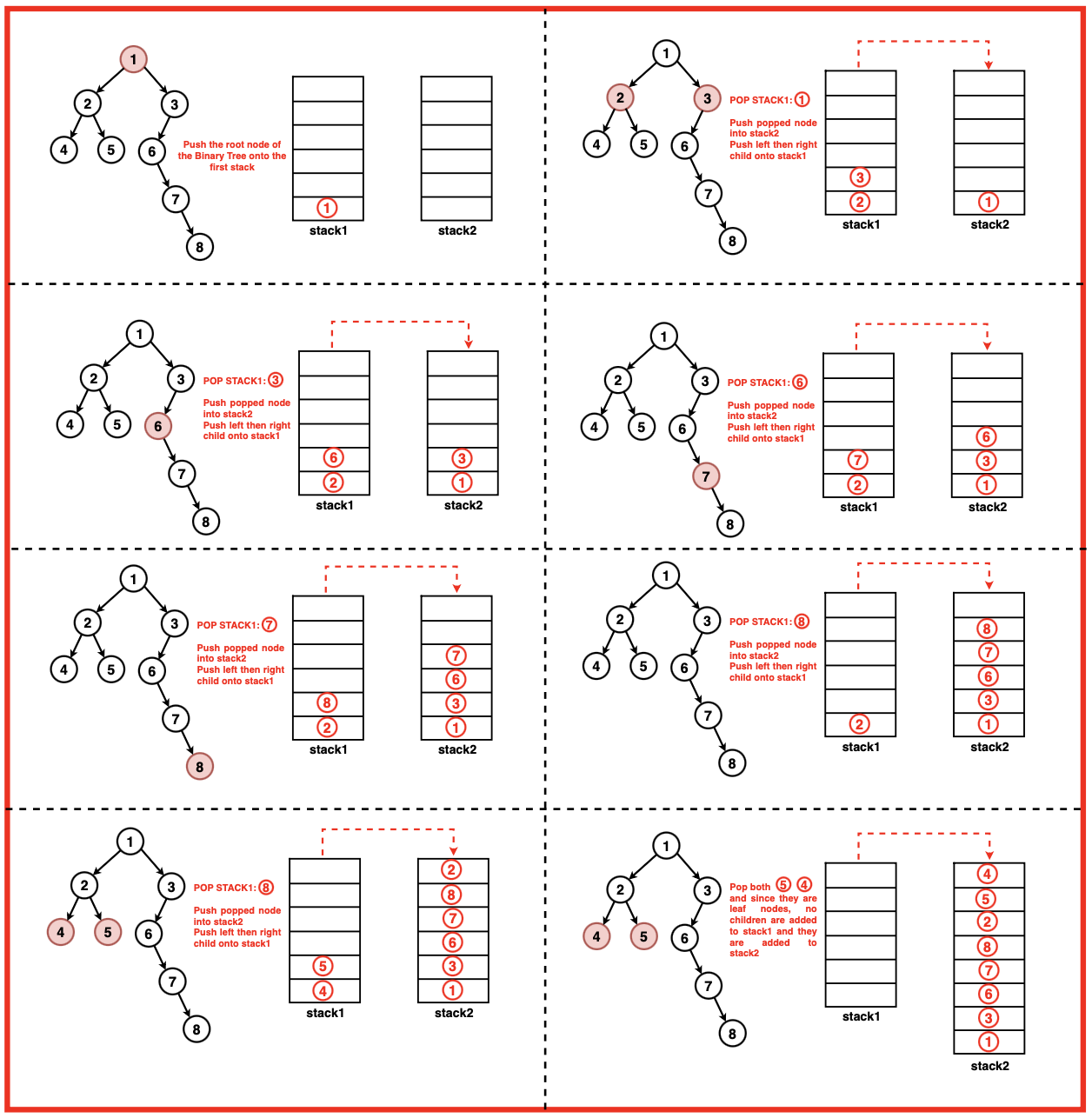

In postorder traversal, the nodes are recursively visited in this order: left subtree, right subtree, and then the root node. This traversal is particularly useful for deleting trees or evaluating postfix expressions.

class Node:

def __init__(self, key):

self.left = None

self.right = None

self.val = key

def postorder_traversal(root):

if root is None:

return

postorder_traversal(root.left)

postorder_traversal(root.right)

print(root.val, end=' ')

def postorder_traversal_iterative(root):

if root is None:

return

stack1 = [root]

stack2 = []

while stack1:

node = stack1.pop()

stack2.append(node)

if node.left:

stack1.append(node.left)

if node.right:

stack1.append(node.right)

while stack2:

node = stack2.pop()

print(node.val, end=' ')

# iterative version using one stack

def postorder_traversal_iterative_one_stack(root):

if root is None:

return

stack = []

current = root

while stack or current:

if current:

stack.append(current)

current = current.left

else:

temp = stack[-1].right

if temp is None:

temp = stack.pop()

print(temp.val, end=' ')

while stack and temp == stack[-1].right:

temp = stack.pop()

print(temp.val, end=' ')

else:

current = temp

# Example usage:

if __name__ == "__main__":

root = Node(1)

root.left = Node(2)

root.right = Node(3)

root.left.left = Node(4)

root.left.right = Node(5)

print("Postorder traversal of binary tree is:")

postorder_traversal(root) # Output: 4 5 2 3 1Preorder Traversal of Binary Tree

In preorder traversal, the nodes are recursively visited in this order: root node, left subtree, and then right subtree. This traversal is useful for creating a copy of the tree or for prefix expression evaluation.

class Node:

def __init__(self, key):

self.left = None

self.right = None

self.val = key

def preorder_traversal(root):

if root is None:

return

print(root.val, end=' ')

preorder_traversal(root.left)

preorder_traversal(root.right)

def preorder_traversal_iterative(root):

if root is None:

return

stack = [root]

while stack:

node = stack.pop()

print(node.val, end=' ')

if node.right:

stack.append(node.right)

if node.left:

stack.append(node.left)

# Example usage:

if __name__ == "__main__":

root = Node(1)

root.left = Node(2)

root.right = Node(3)

root.left.left = Node(4)

root.left.right = Node(5)

print("Preorder traversal of binary tree is:")

preorder_traversal(root) # Output: 1 2 4 5 3Height of a Binary Tree

The height of a binary tree is defined as the number of edges on the longest path from the root node to a leaf node. If the tree is empty, the height is -1. If the tree has only one node (the root), the height is 0.

class Node:

def __init__(self, key):

self.left = None

self.right = None

self.val = key

def height_of_tree_dfs_recursive(root):

if root is None:

return -1 # Height of empty tree is -1

else:

left_height = height_of_tree_dfs_recursive(root.left)

right_height = height_of_tree_dfs_recursive(root.right)

return max(left_height, right_height) + 1

def height_of_tree_bfs_with_depth(root):

if root is None:

return -1

from collections import deque

queue = deque([(root, 0)]) # (node, current_depth_in_edges)

max_height = 0

while queue:

node, depth = queue.popleft()

max_height = max(max_height, depth)

if node.left:

queue.append((node.left, depth + 1))

if node.right:

queue.append((node.right, depth + 1))

return max_height

def height_of_tree_bfs_level_order(root):

if root is None:

return -1

from collections import deque

queue = deque([root])

levels = 0

while queue:

for _ in range(len(queue)):

node = queue.popleft()

if node.left:

queue.append(node.left)

if node.right:

queue.append(node.right)

levels += 1

return levels - 1Lower bound and Upper bound in a sorted array

-

Lower Bound: The lower bound of a target value in a sorted array is the index of the first element that is not less than (i.e., greater than or equal to) the target. If all elements are less than the target, it returns the length of the array.

-

Upper Bound: The upper bound of a target value in a sorted array is the index of the first element that is greater than the target. If all elements are less than or equal to the target, it returns the length of the array.

def lower_bound(arr, target):

left, right = 0, len(arr)

while left < right:

mid = left + (right - left) // 2

if arr[mid] < target:

left = mid + 1

else:

right = mid

return left

def upper_bound(arr, target):

left, right = 0, len(arr)

while left < right:

mid = left + (right - left) // 2

if arr[mid] <= target:

left = mid + 1

else:

right = mid

return leftSegment Tree

A Segment Tree is a binary tree used for storing intervals or segments. It allows querying which of the stored segments contain a given point efficiently. It is particularly useful for answering range queries and performing updates on an array.

class SegmentTree:

"""

Segment Tree data structure for efficient range queries and updates.

Supports range sum queries and point updates in O(log n) time.

"""

class Node:

def __init__(self, start_interval: int, end_interval: int):

self.data = 0

self.start_interval = start_interval

self.end_interval = end_interval

self.left = None

self.right = None

def __repr__(self):

return f"Node([{self.start_interval}-{self.end_interval}], data={self.data})"

def __init__(self, arr: list[int]):

if not arr:

raise ValueError("Array cannot be empty")

self.root = self._construct_tree(arr, 0, len(arr) - 1)

def _construct_tree(self, arr: list[int], start: int, end: int) -> Node:

"""

Recursively construct the segment tree.

Args:

arr: Input array

start: Start index of current segment

end: End index of current segment

Returns:

Root node of the constructed subtree

"""

if start == end:

# Leaf node

leaf = self.Node(start, end)

leaf.data = arr[start]

return leaf

# Create new node for current segment

node = self.Node(start, end)

# Avoid potential overflow with this mid calculation

mid = start + (end - start) // 2

node.left = self._construct_tree(arr, start, mid)

node.right = self._construct_tree(arr, mid + 1, end)

node.data = node.left.data + node.right.data

return node

def display(self):

if self.root:

self._display(self.root)

def _display(self, node: Node):

parts = []

if node.left:

parts.append(f"Interval=[{node.left.start_interval}-{node.left.end_interval}] and data: {node.left.data} => ")

else:

parts.append("No left child => ")

# Current node

parts.append(f"Interval=[{node.start_interval}-{node.end_interval}] and data: {node.data}")

if node.right:

parts.append(f" <= Interval=[{node.right.start_interval}-{node.right.end_interval}] and data: {node.right.data}")

else:

parts.append(" <= No right child")

print(''.join(parts) + '\n')

if node.left:

self._display(node.left)

if node.right:

self._display(node.right)

def query(self, qsi: int, qei: int) -> int:

"""

Perform range sum query.

Args:

qsi: Query start index

qei: Query end index

Returns:

Sum of elements in range [qsi, qei]

"""

return self._query(self.root, qsi, qei)

def _query(self, node: Node, qsi: int, qei: int) -> int:

"""

Recursively perform range query.

Args:

node: Current node

qsi: Query start index

qei: Query end index

Returns:

Sum for the queried range

"""

# Node completely lies inside query range

if node.start_interval >= qsi and node.end_interval <= qei:

return node.data

# Node completely outside query range

if node.start_interval > qei or node.end_interval < qsi:

return 0

# Partial overlap - query both children

return self._query(node.left, qsi, qei) + self._query(node.right, qsi, qei)

def update(self, index: int, value: int):

"""

Update value at a specific index.

Args:

index: Index to update

value: New value

"""

self._update(self.root, index, value)

def _update(self, node: Node, index: int, value: int) -> int:

"""

Recursively update the value at given index.

Args:

node: Current node

index: Index to update

value: New value

Returns:

Updated data value for current node

"""

# Check if index lies in current node's range

if index >= node.start_interval and index <= node.end_interval:

# Leaf node - update the value

if index == node.start_interval and index == node.end_interval:

node.data = value

return node.data

# Internal node - update children and recalculate

left_ans = self._update(node.left, index, value)

right_ans = self._update(node.right, index, value)

node.data = left_ans + right_ans

return node.data

return node.data

if __name__ == "__main__":

# Create segment tree

arr = [1, 3, 5, 7, 9, 11]

seg_tree = SegmentTree(arr)

print("Segment Tree Structure:")

seg_tree.display()

# Query examples

print("\n--- Range Queries ---")

print(f"Sum of range [1, 4]: {seg_tree.query(1, 4)}") # 3+5+7+9 = 24

print(f"Sum of range [0, 2]: {seg_tree.query(0, 2)}") # 1+3+5 = 9

# Update example

print("\n--- Update Operation ---")

print(f"Updating index 2 from {arr[2]} to 10")

seg_tree.update(2, 10)

print(f"Sum of range [1, 4] after update: {seg_tree.query(1, 4)}") # 3+10+7+9 = 29

print(f"Sum of range [0, 2] after update: {seg_tree.query(0, 2)}") # 1+3+10 = 14Greedy Methods and Algorithms

Fundamental Concepts and Definition

What are Greedy Algorithms?

Greedy algorithms are a class of algorithms that solve optimization problems by making locally optimal choices at each step, with the hope of finding a global optimum. The term "greedy" reflects the algorithm's myopic behavior - it grabs the best immediate option without considering future consequences. Programiz

Formal Definition: A greedy algorithm constructs a solution to an optimization problem by iteratively selecting elements that maximize (or minimize) some criterion function at each step , without reconsidering previous choices.

Core Principles The greedy paradigm operates on three fundamental principles:

- Incremental construction: Solutions are built piece by piece

- Irrevocable decisions: Once a choice is made, it cannot be undone

- Local optimization: Each decision optimizes based on current state only

Essential Properties of Greedy Algorithms

Deterministic Nature: Given the same input and selection criteria, a greedy algorithm always produces the same output, making it predictable and analyzable. No Backtracking: Unlike exhaustive search or dynamic programming, greedy algorithms never reconsider or modify previous decisions. This property leads to their efficiency but can also result in suboptimal solutions for certain problems. Simple Decision Rules: Greedy algorithms typically employ straightforward selection criteria such as:

- Maximum or minimum value selection

- Earliest or latest time selection

- Highest ratio or density selection

- Shortest or longest distance selection

Memory Efficiency: Most greedy algorithms require minimal auxiliary space, often or , as they don't need to store multiple solution states or subproblem results.

Algorithmic Characteristics

The general structure of a greedy algorithm follows this pattern:

GREEDY-ALGORITHM(problem_instance):

solution = ∅

candidates = INITIALIZE-CANDIDATES(problem_instance)

while solution is not complete and candidates ≠ ∅:

x = SELECT-BEST(candidates)

candidates = candidates - {x}

if FEASIBLE(solution U {x}):

solution = solution U {x}

return solutionThe Greedy Choice Property

Definition: A global optimum can be arrived at by making locally optimal (greedy) choices.

Formal Statement: For an optimization problem with objective function and solution space , the greedy choice property holds if:

- partial solution , optimal solution such that contains the greedy choice made from state

Optimal Substructure

Definition: An optimal solution to the problem contains optimal solutions to subproblems.

Mathematical Formulation: If is an optimal solution to problem , and can be decomposed into subproblems , then contains optimal solutions to these subproblems.

Classic Greedy Algorithm Examples

Activity Selection Problem

Problem Statement: Given n activities with start times sᵢ and finish times fᵢ, select the maximum number of non-overlapping activities.

Mathematical Formulation:

- Input: Activities with intervals

- Output: Maximum subset such that ∀ ,

Algorithm:

- Sort activities by increasing finish time.

- Iteratively pick the next activity whose start time ≥ last selected activity's finish time.

- This greedy choice (earliest finishing first) yields an optimal solution.

def select_max_activities(activities):

"""

activities: list of (start, finish) pairs representing [s, f)

returns: list of selected (start, finish) pairs forming a maximum-size compatible set

"""

# 1) Sort by finish time

activities = sorted(activities, key=lambda x: x[1])

selected = []

last_finish = float("-inf") # allows picking the first interval

# 2) Greedily select non-overlapping intervals

for s, f in activities:

if s >= last_finish: # [s, f) allows s == last_finish

selected.append((s, f))

last_finish = f

return selected

# Example

if __name__ == "__main__":

acts = [(1, 4), (3, 5), (0, 6), (5, 7), (8, 9), (5, 9)]

chosen = select_max_activities(acts)

print("Selected:", chosen) # e.g., [(1, 4), (5, 7), (8, 9)]

print("Maximum count:", len(chosen)) # 3Time Complexity: for sorting + for selection = Space Complexity: for storing selected activities

Knapsack Problem (Fractional)

Problem Statement: Given n items with weights and values , and knapsack capacity , maximize value while respecting weight constraint. Fractional items allowed.

Mathematical Formulation: Maximize: Subject to: , where

Greedy Strategy

- Sort items by value density in descending order.

- Take whole items while capacity allows; take a fraction of the first item that doesn't fit.

def fractional_knapsack(items, capacity):

"""

items: list of (value, weight)

capacity: knapsack capacity

returns: (max_value, picks) where picks = [(value, weight, fraction_taken), ...]

"""

# Sort by value density (value/weight), highest first

items = sorted(items, key=lambda x: x[0] / x[1], reverse=True)

value = 0.0

picks = []

for v, w in items:

if capacity <= 0:

break

if w <= capacity:

value += v

picks.append((v, w, 1.0))

capacity -= w

else:

frac = capacity / w

value += v * frac

picks.append((v, w, frac))

break

return value, picks

# Example

if __name__ == "__main__":

items = [(60, 10), (100, 20), (120, 30)]

W = 50

max_val, taken = fractional_knapsack(items, W)

print(f"Max value: {max_val:.2f}") # 240.00

print("Taken:", taken) # [(100, 20, 1.0), (120, 30, 1.0), (60, 10, 0.0)] or similar by densityTime Complexity: for sorting; space .

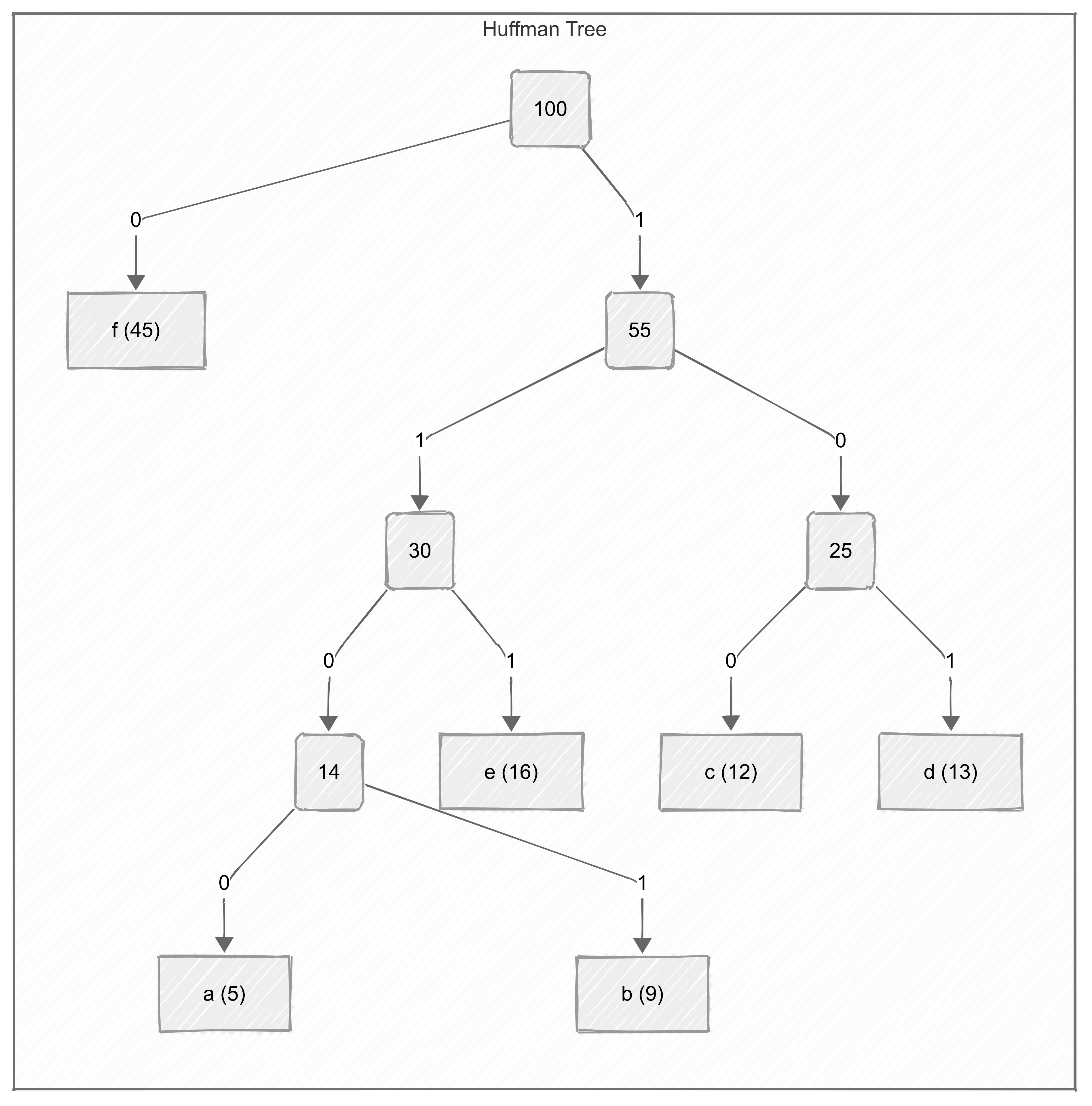

Huffman Coding

Problem Statement: Design optimal prefix-free binary codes for characters based on their frequencies. Mathematical Formulation: Minimize: where is frequency and is code length for character Greedy Strategy:

- Build a min-heap of nodes (characters with frequencies).

- Repeatedly extract two nodes with smallest frequencies, combine them into a new node with summed frequency, and reinsert.

- Continue until one node remains (the root of the Huffman tree).

- Assign binary codes by traversing the tree (left=0, right=1).

Huffman Codes Generated:

| Character | Frequency | Huffman Code | Code Length |

|---|---|---|---|

| f | 45 | 0 | 1 |

| e | 16 | 111 | 3 |

| d | 13 | 101 | 3 |

| c | 12 | 100 | 3 |

| b | 9 | 1101 | 4 |

| a | 5 | 1100 | 4 |

import heapq

from collections import defaultdict

class Node:

def __init__(self, char, freq):

self.char = char

self.freq = freq

self.left = None

self.right = None

def __lt__(self, other):

return self.freq < other.freq

def huffman_coding(frequencies):

"""

frequencies: dict of {char: freq}

returns: dict of {char: huffman_code}

"""

# Build priority queue (min-heap)

heap = [Node(char, freq) for char, freq in frequencies.items()]

heapq.heapify(heap)

# Build Huffman Tree

while len(heap) > 1:

left = heapq.heappop(heap)

right = heapq.heappop(heap)

merged = Node(None, left.freq + right.freq)

merged.left = left

merged.right = right

heapq.heappush(heap, merged)

root = heap[0]

# Generate codes

codes = {}

def generate_codes(node, current_code):

if node:

if node.char is not None:

codes[node.char] = current_code

generate_codes(node.left, current_code + "0")

generate_codes(node.right, current_code + "1")

generate_codes(root, "")

return codes

# Example

if __name__ == "__main__":

freqs = {'a': 5, 'b': 9, 'c': 12, 'd': 13, 'e': 16, 'f': 45}

huff_codes = huffman_coding(freqs)

print("Huffman Codes:", huff_codes)Time Complexity: for building the tree; space for codes.

Minimum Spanning Tree (MST) - Prim's and Kruskal's Algorithms

Problem Statement: Given a connected, undirected graph, find a subset of edges that forms a tree including every vertex, where the total edge weight is minimized.

The algorithm is greedy because at each step, it chooses the minimum weight edge available that maintains the tree property (no cycles).

Mathematical Formulation: Minimize: where

- is the set of edges in the spanning tree

- is the weight of edge

Greedy Strategies:

- Prim's Algorithm: Start from an arbitrary vertex, grow the MST by adding the smallest edge that connects a vertex in the MST to a vertex outside it.

- Initialize a min-heap (priority queue) with the starting vertex and its edges.

- While the min-heap is not empty:

- Extract the minimum weight edge.

- If the edge connects a vertex outside the MST, add it to the MST and include the new vertex.

- Otherwise, discard the edge.

import heapq

def prim_mst(graph, start=0):

"""

graph: adjacency list {node: [(neighbor, weight), ...]}

start: starting vertex

returns: list of edges in the MST and total weight

"""

mst_edges = []

total_weight = 0

visited = set()

min_heap = [(0, start, None)] # (weight, current_node, from_node)

while min_heap:

weight, u, from_node = heapq.heappop(min_heap)

if u in visited:

continue

visited.add(u)

total_weight += weight

if from_node is not None:

mst_edges.append((from_node, u, weight))

for v, w in graph[u]:

if v not in visited:

heapq.heappush(min_heap, (w, v, u))

return mst_edges, total_weight

# Example

if __name__ == "__main__":

graph = {

0: [(1, 4), (2, 1)],

1: [(0, 4), (2, 2), (3, 5)],

2: [(0, 1), (1, 2), (3, 8)],

3: [(1, 5), (2, 8)]

}

mst, weight = prim_mst(graph)

print("MST Edges:", mst)

print("Total Weight:", weight)Time Complexity: using a priority queue; space for visited set and heap.

- Kruskal's Algorithm: Sort all edges by weight, and add them one by one to the MST, skipping those that would form a cycle (using Union-Find to detect cycles).

- Sort edges by weight.

- Initialize a Union-Find structure to track connected components.

- Iterate through sorted edges:

- If the edge connects two different components, add it to the MST and union the components

class UnionFind:

def __init__(self, size):

self.parent = list(range(size))

self.rank = [1] * size

def find(self, u):

if self.parent[u] != u:

self.parent[u] = self.find(self.parent[u])

return self.parent[u]

def union(self, u, v):

root_u = self.find(u)

root_v = self.find(v)

if root_u != root_v:

if self.rank[root_u] > self.rank[root_v]:

self.parent[root_v] = root_u

elif self.rank[root_u] < self.rank[root_v]:

self.parent[root_u] = root_v

else:

self.parent[root_v] = root_u

self.rank[root_u] += 1

def kruskal_mst(edges, num_vertices):

"""

edges: list of (weight, u, v)

num_vertices: number of vertices in the graph

returns: list of edges in the MST and total weight

"""

edges.sort() # Sort edges by weight

uf = UnionFind(num_vertices)

mst_edges = []

total_weight = 0

for weight, u, v in edges:

if uf.find(u) != uf.find(v):

uf.union(u, v)

mst_edges.append((u, v, weight))

total_weight += weight

return mst_edges, total_weight

# Example

if __name__ == "__main__":

edges = [

(4, 0, 1), (1, 0, 2), (2, 1, 2),

(5, 1, 3), (8, 2, 3)

]

num_vertices = 4

mst, weight = kruskal_mst(edges, num_vertices)

print("MST Edges:", mst)

print("Total Weight:", weight)Dynamic Programming

What is Dynamic Programming? Dynamic Programming is a method for solving complex problems by breaking them down into simpler subproblems and storing the results of these subproblems to avoid redundant computations. It is particularly effective for optimization problems where the solution can be constructed from solutions to smaller subproblems. Formal Definition: A problem exhibits optimal substructure if an optimal solution to the problem contains optimal solutions to its subproblems. It exhibits overlapping subproblems if the same subproblems are solved multiple times during the computation of the overall solution.

Core Principles The dynamic programming paradigm operates on three fundamental principles:

-

Optimal Substructure: The optimal solution to a problem can be constructed from optimal solutions to its subproblems.

-

Overlapping Subproblems: The problem can be broken down into subproblems that

are reused multiple times.

-

Memoization or Tabulation: Store the results of subproblems to avoid redundant computations.

Essential Properties of Dynamic Programming

Deterministic Nature: Given the same input, a dynamic programming algorithm will always produce the same output, making it predictable and analyzable. Optimal Substructure: If a problem can be divided into subproblems, and the optimal solution to the overall problem can be constructed from the optimal solutions of its subproblems, then the problem has optimal substructure. This property is crucial for the applicability of dynamic programming. Overlapping Subproblems: If a problem can be broken down into subproblems that are solved multiple times, then the problem has overlapping subproblems. This property allows dynamic programming to store the results of these subproblems and reuse them, significantly improving efficiency. Memory Efficiency: Dynamic programming algorithms often require additional memory to store the results of subproblems, which can lead to increased space complexity. However, techniques such as space optimization can be employed to reduce memory usage in certain cases.

Classic Dynamic Programming Examples

Fibonacci Sequence

Problem Statement: Compute the n-th Fibonacci number, where each number is the sum of the two preceding ones, starting from 0 and 1. Mathematical Formulation:

- Base Cases:

- Recurrence Relation: for

# Recursive with Memoization

def fibonacci_memo(n, memo={}):

if n in memo:

return memo[n]

if n <= 1:

return n

memo[n] = fibonacci_memo(n-1, memo) + fibonacci_memo(n-2, memo)

return memo[n]

# Iterative with Tabulation

def fibonacci_tab(n):

if n <= 1:

return n

fib = [0] * (n + 1)

fib[1] = 1

for i in range(2, n + 1):

fib[i] = fib[i - 1] + fib[i - 2]

return fib[n]

# Space Optimized Iterative

def fibonacci_optimized(n):

if n <= 1:

return n

a, b = 0, 1

for _ in range(2, n + 1):

a, b = b, a + b

return b

# Example

if __name__ == "__main__":

n = 10

print(f"Fibonacci({n}) with memoization:", fibonacci_memo(n))

print(f"Fibonacci({n}) with tabulation:", fibonacci_tab(n))

print(f"Fibonacci({n}) optimized:", fibonacci_optimized(n))0/1 Knapsack Problem

Problem Statement: Given weights and values of items, put these items in a knapsack of capacity to get the maximum total value in the knapsack. Each item can be included or excluded .

Mathematical Formulation:

- Let be the value and be the weight of item

- Let be the maximum value that can be attained with a knapsack capacity using the first items.

- Recurrence Relation:

- for all

- for all

- if

- if

def knapsack_01(weights, values, W):

n = len(values)

dp = [[0 for _ in range(W + 1)] for _ in range(n + 1)]

for i in range(1, n + 1):

for w in range(W + 1):

if weights[i - 1] <= w:

dp[i][w] = max(dp[i - 1][w], values[i - 1] + dp[i - 1][w - weights[i - 1]])

else:

dp[i][w] = dp[i - 1][w]

return dp[n][W]

# Example

if __name__ == "__main__":

weights = [3, 4, 6, 5]

values = [2, 3, 1, 4]

W = 8

print("Maximum value in Knapsack =", knapsack_01(weights, values, W))

# Output: 6DP table (rows = items added, columns = capacity 0-8):

| items\capacity | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|---|

| 0 (0,0) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 (3,2) | 0 | 0 | 0 | 2 | 2 | 2 | 2 | 2 | 2 |

| 2 (4,3) | 0 | 0 | 0 | 2 | 3 | 3 | 3 | 5 | 5 |

| 3 (5,4) | 0 | 0 | 0 | 2 | 3 | 4 | 4 | 5 | 6 |

| 4 (6,1) | 0 | 0 | 0 | 2 | 3 | 4 | 4 | 5 | 6 |

Longest Common Subsequence (LCS)

Problem Statement: Given two sequences, find the length of the longest subsequence present in both of them. A subsequence is a sequence that appears in the same relative order but not necessarily contiguous.

Mathematical Formulation:

- Let and

- Let be the length of LCS of and

- Recurrence Relation:

- for all

- for all

- if

- if

def lcs(X, Y):

m = len(X)

n = len(Y)

dp = [[0] * (n + 1) for _ in range(m + 1)]

for i in range(1, m + 1):

for j in range(1, n + 1):

if X[i - 1] == Y[j - 1]:

dp[i][j] = dp[i - 1][j - 1] + 1

else:

dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])

return dp[m][n]

# Example

if __name__ == "__main__":

X = "AGGTAB"

Y = "GXTXAYB"

print("Length of LCS is", lcs(X, Y)) # Output: 4DP table (rows = chars of X, columns = chars of Y):

| 0 | 1 (G) | 2 (X) | 3 (T) | 4 (X) | 5 (A) | 6 (Y) | 7 (B) | |

|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 (A) | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 |

| 2 (G) | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 3 (G) | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 4 (T) | 0 | 1 | 1 | 2 | 2 | 2 | 2 | 2 |

| 5 (A) | 0 | 1 | 1 | 2 | 2 | 3 | 3 | 3 |

| 6 (B) | 0 | 1 | 1 | 2 | 2 | 3 | 3 | 4 |

Time Complexity: where and are the lengths of the two sequences. Space Complexity: for the DP table; can be optimized to by storing only the current and previous rows.

Edit Distance (Levenshtein Distance)

Problem Statement: Given two strings, find the minimum number of operations (insertions, deletions, substitutions) required to convert one string into the other.

Mathematical Formulation:

- Let and

- Let be the edit distance between and

- Recurrence Relation:

- for all (all insertions)

- for all

- if

- if

def edit_distance(X, Y):

m = len(X)

n = len(Y)

dp = [[0] * (n + 1) for _ in range(m + 1)]

for i in range(m + 1):

for j in range(n + 1):

if i == 0:

dp[i][j] = j # Insert all j characters

elif j == 0:

dp[i][j] = i # Remove all i characters

elif X[i - 1] == Y[j - 1]:

dp[i][j] = dp[i - 1][j - 1]

else:

dp[i][j] = 1 + min(dp[i - 1][j], dp[i][j - 1], dp[i - 1][j - 1])

return dp[m][n]

# Example

if __name__ == "__main__":

X = "kitten"

Y = "sitting"

print("Edit Distance is", edit_distance(X, Y)) # Output: 3Time Complexity: where and are the lengths of the two strings. Space Complexity: for the DP table; can be optimized to by storing only the current and previous rows.

Longest Increasing Subsequence (LIS)

Given an integer array nums, return the length of the longest strictly increasing subsequence.

Recursive Solution:

class Solution:

def lengthOfLIS(self, nums: List[int]) -> int:

n = len(nums)

return self.LIS(0, -1, nums, n)

def lengthOfLIS(self, nums: List[int]) -> int:

n = len(nums)

dp = [[-1] * (n + 1) for _ in range(n)]

return self.LIS_DP(0, -1, nums, n, dp)

def LIS(self, int index, int prev_index, int[] nums, int n) -> int:

if index == n:

return 0

take = 0

if prev_index == -1 or nums[index] > nums[prev_index]:

take = 1 + self.LIS(index + 1, index, nums, n)

not_take = self.LIS(index + 1, prev_index, nums, n)

return max(take, not_take)

def LIS_DP(self, index, prev_index, nums, n, dp) -> int:

if index == n:

return 0

if dp[index][prev_index] != -1:

return dp[index][prev_index]

take = 0

if prev_index == -1 or nums[index] > nums[prev_index]:

take = 1 + self.LIS_DP(index + 1, index, nums, n, dp)

not_take = self.LIS_DP(index + 1, prev_index, nums, n, dp)

dp[index][prev_index] = max(take, not_take)

return dp[index][prev_index]Mathematical Formulation:

- Let

- Let be the length of the longest increasing subsequence ending at index

- Recurrence Relation:

- for all and

Tabular Solution:

def lis(A):

n = len(A)

dp = [1] * n

for i in range(1, n):

for j in range(i):

if A[i] > A[j]:

dp[i] = max(dp[i], dp[j] + 1)

return max(dp)

# Example

if __name__ == "__main__":

A = [10, 9, 2, 5, 3, 7, 101, 18]

print("Length of LIS is", lis(A)) # Output: 4DP table (rows = index, columns = length of LIS):

| 0(10) | 1(9) | 2(2) | 3(5) | 4(3) | 5(7) | 6(101) | 7(18) | |

|---|---|---|---|---|---|---|---|---|

| 0 (10) | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 1 (9) | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 2 (2) | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 3 (5) | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 |

| 4 (3) | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 |

| 5 (7) | 1 | 1 | 1 | 2 | 2 | 3 | 3 | 3 |

| 6 (101) | 1 | 1 | 1 | 2 | 2 | 3 | 4 | 4 |

| 7 (18) | 1 | 1 | 1 | 2 | 2 | 3 | 4 | 4 |

Time Complexity: where is the length of the array. Space Complexity: for the DP table.

Graph Data Structures and Algorithms

Graph Data Structures

Graphs are abstract data types that consist of a set of nodes (or vertices) and a set of edges connecting pairs of nodes. They are used to model relationships and connections in various domains, such as social networks, transportation systems, and communication networks.

Graph Representations

-

Adjacency Matrix: A 2D array where the cell at row

iand columnjindicates the presence (and possibly weight) of an edge between vertexiand vertexj. This representation is efficient for dense graphs but can be space-inefficient for sparse graphs.- Space Complexity: where is the number of vertices.

- Time Complexity: for edge look-up, for iterating over neighbors.

class GraphAdjMatrix: def __init__(self, vertices): self.V = vertices self.adj_matrix = [[0] * vertices for _ in range(vertices)] def add_edge(self, u, v, weight=1): self.adj_matrix[u][v] = weight self.adj_matrix[v][u] = weight # For undirected graph def remove_edge(self, u, v): self.adj_matrix[u][v] = 0 self.adj_matrix[v][u] = 0 def display(self): for row in self.adj_matrix: print(row) -

Adjacency List: A list where each vertex has a list of its adjacent vertices (and possibly edge weights). This representation is more space-efficient for sparse graphs.

- Space Complexity: where is the number of edges.

- Time Complexity: for edge look-up, for iterating over neighbors.

class GraphAdjList: def __init__(self): self.adj_list = {} def add_edge(self, u, v, weight=1): if u not in self.adj_list: self.adj_list[u] = [] if v not in self.adj_list: self.adj_list[v] = [] self.adj_list[u].append((v, weight)) self.adj_list[v].append((u, weight)) # For undirected graph def remove_edge(self, u, v): if u in self.adj_list: self.adj_list[u] = [pair for pair in self.adj_list[u] if pair[0] != v] if v in self.adj_list: self.adj_list[v] = [pair for pair in self.adj_list[v] if pair[0] != u] def display(self): for key in self.adj_list: print(f"{key}: {self.adj_list[key]}")

Breadth First Search (BFS)

Given an undirected graph, return a vector of all nodes by traversing the graph using breadth-first search (BFS).

from collections import deque

def BFS(graph, start):

visited = set()

queue = deque([start])

visited.add(start)

while queue:

node = queue.popleft()

print(node)

for neighbor in graph[node]:

if neighbor not in visited:

visited.add(neighbor)

queue.append(neighbor)

graph = {

'A': ['B', 'C'],

'B': ['A', 'D'],

'C': ['A', 'D'],

'D': ['B', 'C']

}

BFS(graph, 'A')Depth First Search (DFS)

Given an undirected graph, return a vector of all nodes by traversing the graph using depth-first search (DFS).

from collections import deque

def DFS(graph, start):

visited = set()

stack = deque([start])

visited.add(start)

while stack:

node = stack.pop()

print(node)

for neighbor in graph[node]:

if neighbor not in visited:

visited.add(neighbor)

stack.append(neighbor)

visited = set()

def DFS_recursive(graph, start, visited):

visited.add(start)

print(start)

for neighbor in graph[start]:

if neighbor not in visited:

DFS_recursive(graph, neighbor, visited)

graph = {

'A': ['B', 'C'],

'B': ['A', 'D'],

'C': ['A', 'D'],

'D': ['B', 'C']

}

DFS(graph, 'A')

DFS_recursive(graph, 'A', visited)Some important Alogorithms

Kadane's Algorithm : Maximum Subarray Sum in an Array

The intuition behind Kadane's Algorithm is to iterate through the array while maintaining a running sum of the current subarray. If the running sum becomes negative, it is reset to zero. The maximum sum encountered during the iteration is the result.

Steps:

- Iterate over the array with a loop (index i).

- Add each element to a running sum and update the maximum sum when the running sum is larger.

- If the running sum becomes negative, reset it to 0 since continuing from a negative sum cannot improve the result.

def maxSubarraySum(arr, n):

maximum_sum = float('-inf')

sum = 0

start = 0

subarrayStartIndex, subarrayEndIndex = -1, -1

for i in range(n):

if sum == 0:

start = i

sum += arr[i]

if sum > maximum_sum:

maximum_sum = sum

subarrayStartIndex = start

subarrayEndIndex = i

# If sum < 0: discard the sum calculated

if sum < 0:

sum = 0

print("The subarray is: [", end="")

for i in range(subarrayStartIndex, subarrayEndIndex + 1):

print(arr[i], end=" ")

print("]")

return maximum_sum

arr = [-2, 1, -3, 4, -1, 2, 1, -5, 4]

n = len(arr)

maxSum = maxSubarraySum(arr, n)

print("The maximum subarray sum is:", maxSum)Some Good Questions to Solve

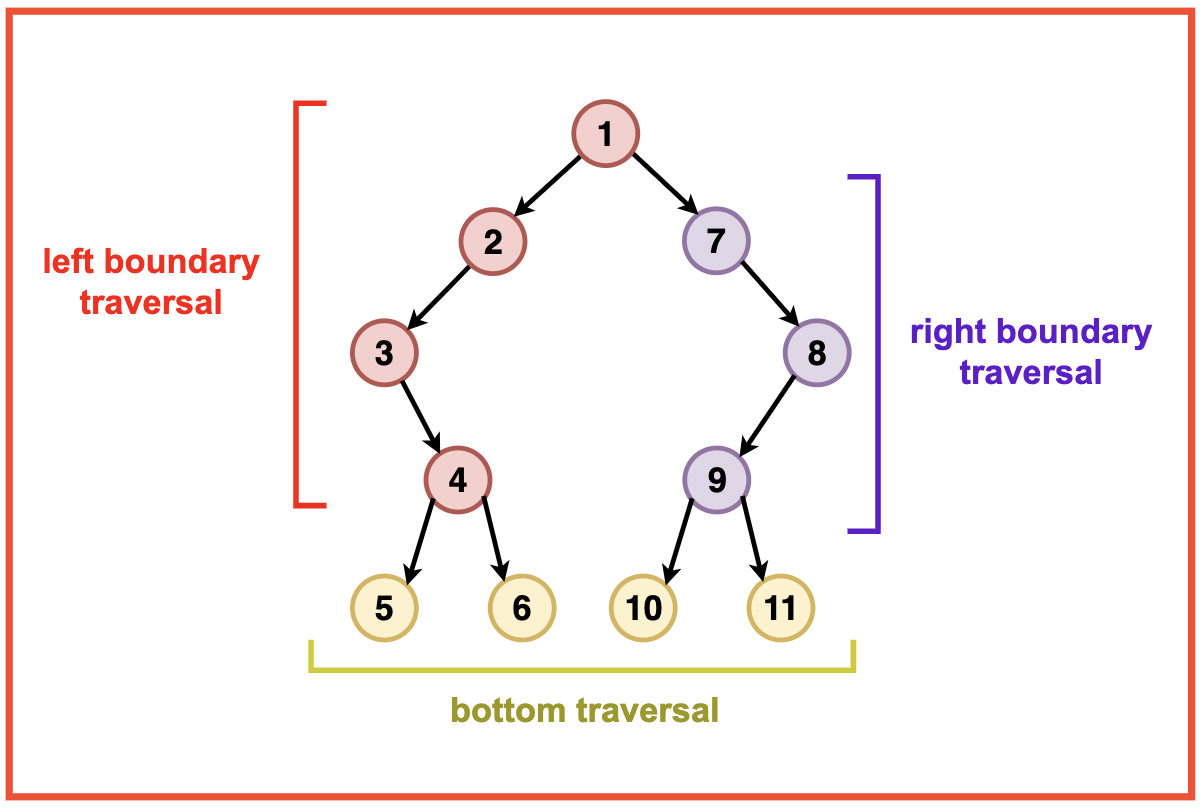

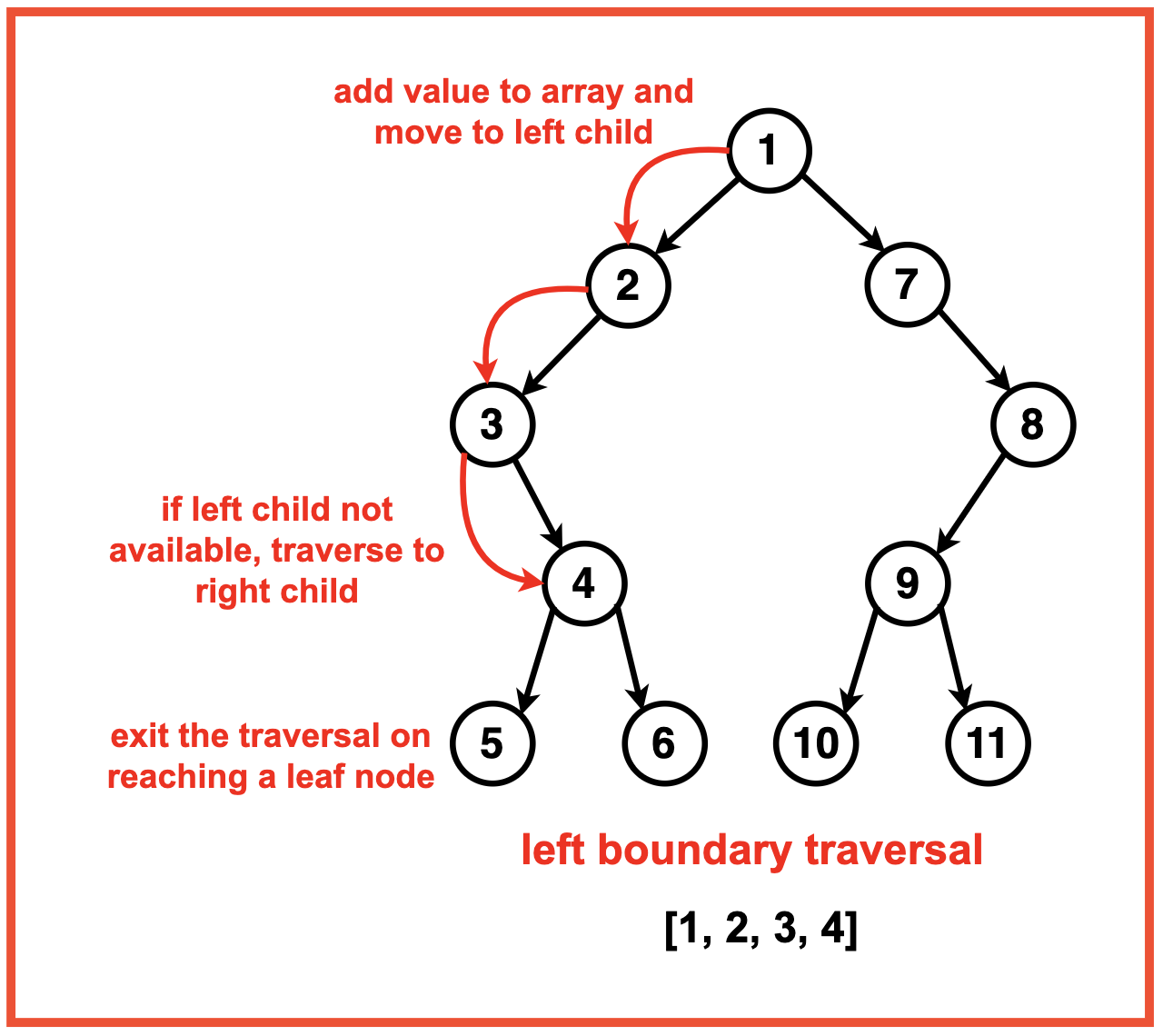

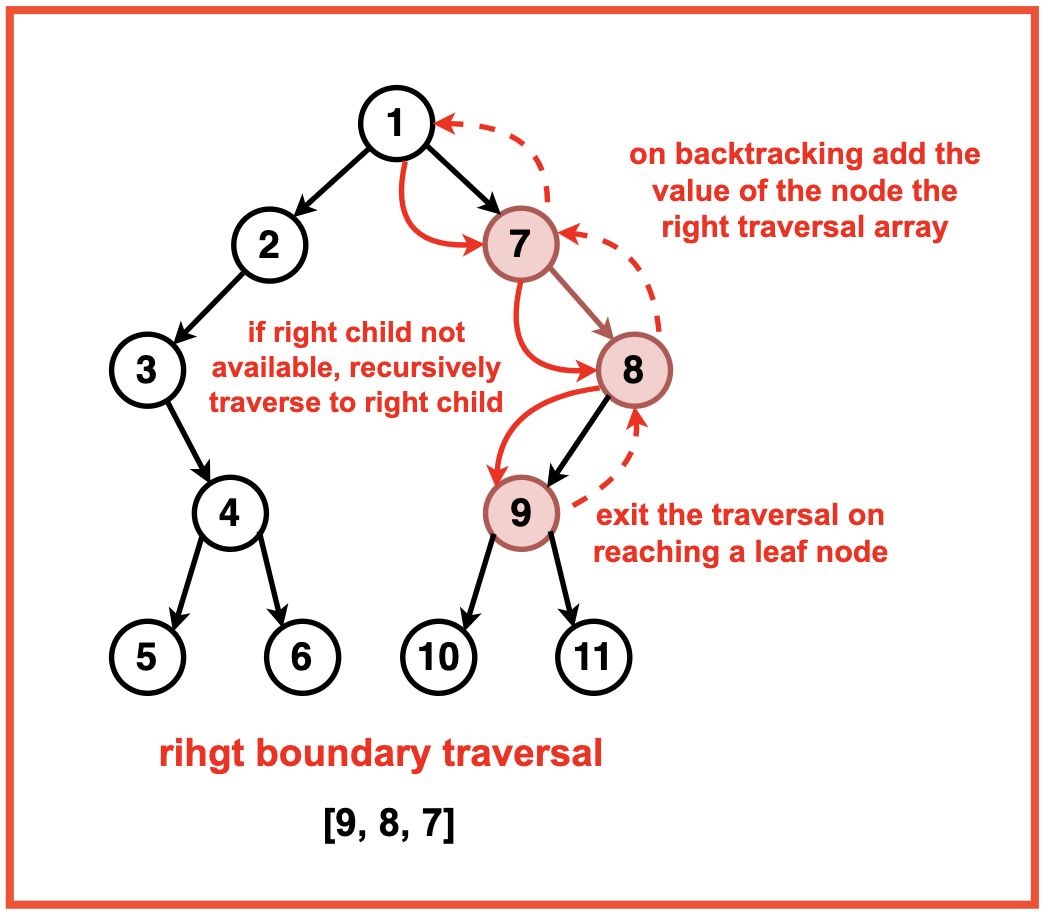

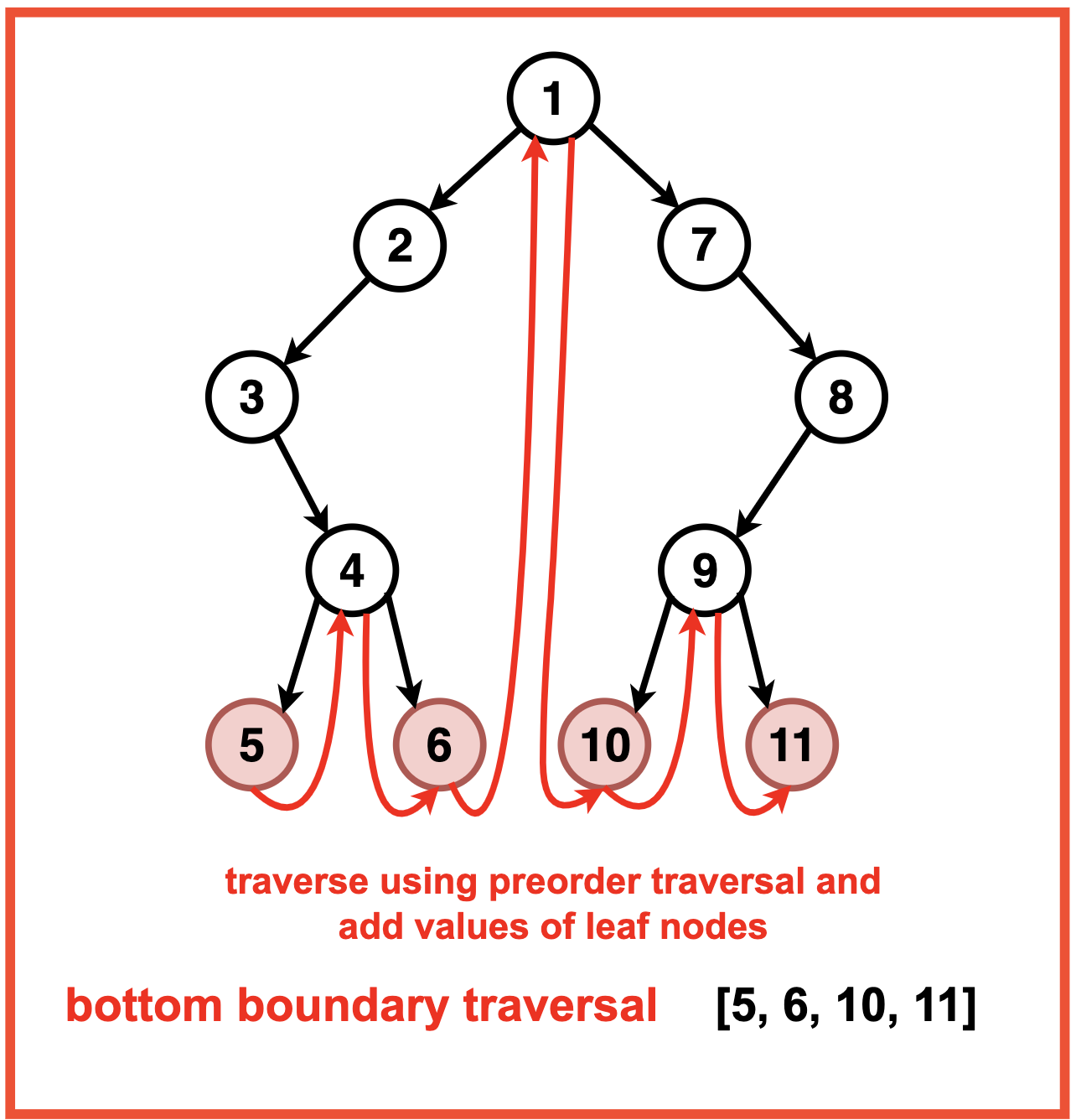

Boundary Traversal of a Binary

# Definition for a binary tree node.

# class TreeNode(object):

# def __init__(self, val=0, left=None, right=None):

# self.data = val

# self.left = left

# self.right = right

class Solution:

# Function to check if a node is a leaf

def isLeaf(self, root):

return not root.left and not root.right

# Function to add the left boundary of the tree

def addLeftBoundary(self, root, res):

curr = root.left

while curr:

if not self.isLeaf(curr):

res.append(curr.data)

if curr.left:

curr = curr.left

else:

curr = curr.right

# Function to add the right boundary of the tree

def addRightBoundary(self, root, res):

curr = root.right

temp = []

while curr:

if not self.isLeaf(curr):

temp.append(curr.data)

if curr.right:

curr = curr.right

else:

curr = curr.left

res.extend(temp[::-1])

# Function to add the leaves of the tree

def addLeaves(self, root, res):

if self.isLeaf(root):

res.append(root.data)

return

if root.left:

self.addLeaves(root.left, res)

if root.right:

self.addLeaves(root.right, res)

# Main function to perform the boundary traversal of the binary tree

def boundary(self, root):

res = []

if not root:

return res

if not self.isLeaf(root):

res.append(root.data)

self.addLeftBoundary(root, res)

self.addLeaves(root, res)

self.addRightBoundary(root, res)

return res

# Helper function to print the result

def printResult(result):

for val in result:

print(val, end=" ")

print()

# Creating a sample binary tree

root = TreeNode(1)

root.left = TreeNode(2)

root.right = TreeNode(3)

root.left.left = TreeNode(4)

root.left.right = TreeNode(5)

root.right.left = TreeNode(6)

root.right.right = TreeNode(7)

solution = Solution()

# Get the boundary traversal

result = solution.boundary(root)

# Print the result

print("Boundary Traversal: ", end="")

printResult(result)

Construct Binary Tree from String with Bracket Representation

4(2(3)(1))(6(5))

│ └──┬──┘ └─┬─┘

│ │ └── Right child of 4: node 6 with left child 5

│ └── Left child of 4: node 2 with children 3 and 1

└── Root value: 4

Tree Structure:

4

/ \

2 6

/ \ /

3 1 5Use Recursion with Index Tracking Since the string has a recursive structure, we can use recursion to parse it. The tricky part is keeping track of our position in the string as we parse.

Algorithm Steps:

- Parse the number at the current position (handle negative numbers too)

- Create a node with that value

- Check for '(' - if found, recursively build the left subtree

- Check for '(' again - if found, recursively build the right subtree

- Skip ')' when returning from recursion

- Return the node

class Node:

def __init__(self, val):

self.data = val

self.left = None

self.right = None

class Solution:

def treeFromString(self, s):

if not s:

return None

self.index = 0 # Track current position in string

return self.buildTree(s)

def buildTree(self, s):

if self.index >= len(s):

return None

# Step 1: Parse the number (could be negative or multi-digit)

num = 0

negative = False

if s[self.index] == '-':

negative = True

self.index += 1

while self.index < len(s) and s[self.index].isdigit():

num = num * 10 + int(s[self.index])

self.index += 1

if negative:

num = -num

# Step 2: Create node with parsed value

node = Node(num)

# Step 3: Check for left child (first parenthesis pair)

if self.index < len(s) and s[self.index] == '(':

self.index += 1 # Skip '('

node.left = self.buildTree(s)

self.index += 1 # Skip ')'

# Step 4: Check for right child (second parenthesis pair)

if self.index < len(s) and s[self.index] == '(':

self.index += 1 # Skip '('

node.right = self.buildTree(s)

self.index += 1 # Skip ')'

return nodeNumber of Islands

Given an m x n 2D binary grid grid which represents a map of '1's (land) and '0's (water), return the number of islands.

An island is surrounded by water and is formed by connecting adjacent lands horizontally or vertically. You may assume all four edges of the grid are all surrounded by water.

Example 1:

grid = [

["1","1","1","1","0"],

["1","1","0","1","0"],

["1","1","0","0","0"],

["0","0","0","0","0"]

]Output: 1

Example 2:

grid = [

["1","1","0","0","0"],

["1","1","0","0","0"],

["0","0","1","0","0"],

["0","0","0","1","1"]

]Output: 3

Solution

class Solution:

def numIslands(self, grid: List[List[str]]) -> int:

directions = [[1, 0], [-1, 0], [0, 1], [0, -1]]

ROWS, COLS = len(grid), len(grid[0])

islands = 0

def bfs(r, c):

q = deque()

grid[r][c] = "0"

q.append((r, c))

while q:

row, col = q.popleft()

for dr, dc in directions:

nr, nc = dr + row, dc + col

if (nr < 0 or nc < 0 or nr >= ROWS or

nc >= COLS or grid[nr][nc] == "0"

):

continue

q.append((nr, nc))

grid[nr][nc] = "0"

for r in range(ROWS):

for c in range(COLS):

if grid[r][c] == "1":

bfs(r, c)

islands += 1

return islandsGenerate Parentheses

Given n pairs of parentheses, write a function to generate all combinations of well-formed parentheses.

Example 1: Input: n = 3 Output: ["((()))","(()())","(())()","()(())","()()()"]

Example 2: Input: n = 1 Output: ["()"]

class Solution:

def generateParenthesis(self, n: int) -> List[str]:

stack = []

res = []

def backtrack(openN, closedN):

if openN == closedN == n:

res.append("".join(stack))

return

if openN < n:

stack.append("(")

backtrack(openN + 1, closedN)

stack.pop()

if closedN < openN:

stack.append(")")

backtrack(openN, closedN + 1)

stack.pop()

backtrack(0, 0)

return resTarget Sum

You are given an integer array nums and an integer target.

You want to build an expression out of nums by adding one of the symbols '+' and '-' before each integer in nums and then concatenate all the integers.

For example, if nums = [2, 1], you can add a '+' before 2 and a '-' before 1 and concatenate them to build the expression "+2-1". Return the number of different expressions that you can build, which evaluates to target.

Example 1: Input: Output: 5

Explanation: There are 5 ways to assign symbols to make the sum of nums be target 3. -1 + 1 + 1 + 1 + 1 = 3 +1 - 1 + 1 + 1 + 1 = 3 +1 + 1 - 1 + 1 + 1 = 3 +1 + 1 + 1 - 1 + 1 = 3 +1 + 1 + 1 + 1 - 1 = 3

Example 2: Input: Output: 1

Constraints:

class Solution:

def findTargetSumWays(self, nums: List[int], target: int) -> int:

dp = {} # (index, total) -> # of ways

def backtrack(index, total):

if index == len(nums):

return 1 if total == target else 0

if (index, total) in dp:

return dp[(index, total)]

dp[(index, total)] = backtrack(index + 1, total + nums[index])

dp[(index, total)] += backtrack(index + 1, total - nums[index])

return dp[(index, total)]

return backtrack(0, 0)Minimum Cost for Tickets

You have planned some train traveling one year in advance. The days of the year in which you will travel are given as an integer array days. Each day is an integer from 1 to 365.

Train tickets are sold in three different ways:

a 1-day pass is sold for costs[0] dollars, a 7-day pass is sold for costs[1] dollars, and a 30-day pass is sold for costs[2] dollars. The passes allow that many days of consecutive travel.

For example, if we get a 7-day pass on day 2, then we can travel for 7 days: 2, 3, 4, 5, 6, 7, and 8. Return the minimum number of dollars you need to travel every day in the given list of days.

Example 1: Input: Output: 11 Explanation: For example, here is one way to buy passes that lets you travel your travel plan: On day 1, you bought a 1-day pass for = $2, which covered day 1. On day 3, you bought a 7-day pass for = $7, which covered days 3, 4, ..., 9. On day 20, you bought a 1-day pass for = $2, which covered day 20. In total, you spent $11 and covered all the days of your travel.

Example 2: Input: Output: 17 Explanation: For example, here is one way to buy passes that lets you travel your travel plan: On day 1, you bought a 30-day pass for = $15 which covered days 1, 2, ..., 30. On day 31, you bought a 1-day pass for = $2 which covered day 31. In total, you spent $17 and covered all the days of your travel.

Constraints: days is in strictly increasing order.

class Solution:

def mincostTickets(self, days: List[int], costs: List[int]) -> int:

dp = {}

def DFS(idx):

if idx == len(days):

return 0

if idx in dp:

return dp[idx]

dp[idx] = float("inf")

for d, c in zip([1, 7, 30], costs):

next_day_idx = idx

while next_day_idx < len(days) and days[next_day_idx] < days[idx] + d:

next_day_idx += 1

dp[idx] = min(dp[idx], c + DFS(next_day_idx))

return dp[idx]

return DFS(0)

# DP solution

def mincostTickets_DP(self, days: List[int], costs: List[int]) -> int:

dp = {}

for i in range(len(days) - 1, -1, -1):

dp[i] = float("inf")

for d, c in zip([1, 7, 30], costs):

j = i

while j < len(days) and days[j] < days[i] + d:

j += 1

dp[i] = min(dp[i], c + dp.get(j, 0))

return dp[0]Longest Palindromic Substring

Given a string s, return the longest palindromic substring in s.

Example 1: Input: s = "babad" Output: "bab" Explanation: "aba" is also a valid answer.

Example 2: Input: s = "cbbd" Output: "bb"

Constraints: consist of only digits and English letters.

class Solution:

def longestPalindrome(self, s: str) -> str:

res = ""

resLen = 0

for i in range(len(s)):

# odd length

l, r = i, i

while l >= 0 and r < len(s) and s[l] == s[r]:

if (r - l + 1) > resLen:

res = s[l : r + 1]

resLen = r - l + 1

l -= 1

r += 1

# even length

l, r = i, i + 1

while l >= 0 and r < len(s) and s[l] == s[r]:

if (r - l + 1) > resLen:

res = s[l : r + 1]

resLen = r - l + 1

l -= 1

r += 1

return resPartition Equal Subset Sum

Given an integer array nums, return true if you can partition the array into two subsets such that the sum of the elements in both subsets is equal or false otherwise.

Example 1: Input: nums = [1,5,11,5] Output: true Explanation: The array can be partitioned as [1, 5, 5] and [11].

Example 2: Input: nums = [1,2,3,5] Output: false Explanation: The array cannot be partitioned into equal sum subsets.

Constraints:

class Solution:

def canPartition(self, nums: List[int]) -> bool:

total_sum = sum(nums)

if total_sum % 2 != 0:

return False

target = total_sum // 2

dp = set()

dp.add(0)

for num in nums:

new_dp = set()

for t in dp:

new_dp.add(t + num)

new_dp.add(t)

dp = new_dp

return target in dp

# DP solution

def canPartition_DP(self, nums: List[int]) -> bool:

total_sum = sum(nums)

if total_sum % 2 != 0:

return False

target = total_sum // 2

dp = [False] * (target + 1)

dp[0] = True

for num in nums:

for t in range(target, num - 1, -1):

dp[t] = dp[t] or dp[t - num]

return dp[target]Maximum Product Subarray

Given an integer array nums, find a subarray that has the largest product, and return the product. The test cases are generated so that the answer will fit in a 32-bit integer. Note that the product of an array with a single element is the value of that element.

Example 1: Input: nums = [2,3,-2,4] Output: 6 Explanation: [2,3] has the largest product 6.

Example 2: Input: nums = [-2,0,-1] Output: 0 Explanation: The result cannot be 2, because [-2,-1] is not a subarray.

Constraints: The product of any subarray of nums is guaranteed to fit in a 32-bit integer.

class Solution:

def maxProduct(self, nums: List[int]) -> int:

n = len(nums)

pre, suff = 1, 1

ans = float('-inf')

for i in range(n):

if pre == 0:

pre = 1

if suff == 0:

suff = 1

pre *= nums[i]

suff *= nums[n - i - 1]

ans = max(ans, pre, suff)

return ans